Making production easier and more efficient by helping to organise, navigate, and enhance audio, and creating new types of programmes that would otherwise be impractical or impossible.

Project from 2013 - present

What we are doing

This workstream aims to unlock new creative possibilities in audio production through the application of audio analysis and machine learning. Recent advances in these fields are allowing us to gain a much deeper understanding of our audio content. This can make production easier and more efficient by helping to organise, navigate, and enhance sound. However, it can also allow us to create new types of programmes that would otherwise be impractical or impossible. For example, we are experimenting with new audience experiences where data is delivered alongside media so that it can be consumed in new and innovative ways.

We work on the cutting edge of semantic audio analysis and related machine learning techniques, delivering advancements in those fields, and making our expertise available to the wider BBC. We take a user-centred approach to our work by emphasising the ultimate audience experience and by understanding the requirements of our production colleagues. To realise our ideas, we partner with specific productions to create and run pilots and test these through formal user studies.

Our Goals

BBC Notes

BBC Notes is a system for producing, distributing, and presenting synchronised programme notes in real time alongside live events and programmes. These notes can be seen by both the audience in the venue and listeners at home. After the event, listeners can read the notes alongside the audio/video recording through an on-demand service. We are working with the BBC Philharmonic to support them in delivering synchronised programme notes to audiences at the Bridgewater Hall, at the Proms, and on Radio 3. We are also exploring how we can use Notes in a variety of creative ways to support events and programmes beyond classical music.

- BBC Notes - Try BBC Notes

- BBC R&D - Introducing BBC Notes: An Enhanced Listening Experience at the BBC Proms

- BBC R&D - BBC Notes: What We Learned at the Proms
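To give a feel for how notes might be kept in step with an on-demand recording, here is a minimal sketch. It is not the Notes implementation: the `Note` type, the `note_at` function, and the example timings are all hypothetical, and it simply assumes each note carries a start offset into the recording and that the list is sorted by that offset.

```python
from bisect import bisect_right
from dataclasses import dataclass
from typing import List, Optional


@dataclass(frozen=True)
class Note:
    # Hypothetical structure: offset into the recording at which the note appears.
    start_seconds: float
    text: str


def note_at(notes: List[Note], position_seconds: float) -> Optional[Note]:
    """Return the most recent note at or before the playback position.

    Assumes `notes` is sorted by start_seconds. Returns None if playback
    has not yet reached the first note.
    """
    starts = [n.start_seconds for n in notes]
    index = bisect_right(starts, position_seconds) - 1
    return notes[index] if index >= 0 else None


# Illustrative data only; these are not real programme notes.
notes = [
    Note(0.0, "Welcome and introduction"),
    Note(95.0, "First movement begins"),
    Note(410.5, "Second movement begins"),
]

current = note_at(notes, 100.0)
print(current.text if current else "(no note yet)")
```

A binary search keeps the lookup cheap even when a player polls the position many times a second. A live event would more likely push each note as it is triggered rather than look it up by time.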

Automatic Tagging of Sound Effects

We are working with the to develop machine learning models that can automatically tag sound effects. We can use these tags to improve the programme recommendations on major BBC services, including BBC iPlayer and BBC Sounds. They can also be used to find sound effects in unlabelled collections, identify unwanted sounds in recordings, or even to support the creation of subtitles. This work follows on from the , in which BBC Research & Development was a partner.

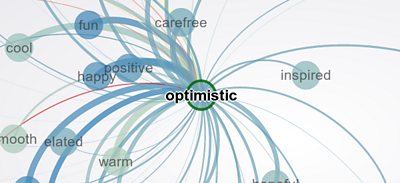

Music recommendation for BBC Sounds

We are working with Queen Mary University of London to develop techniques to map low-level audio features to high-level musical descriptors. This will help us to improve music discovery and recommendation on BBC Sounds and support the curation of playlists.

Outcomes

Enhanced podcasts

We developed a prototype “enhanced podcast” that displays charts, links, topics and contributors on an interactive transcript-based interface. We worked with the Radio 4 programme More or Less to run a public pilot of the experience on BBC Taster.

We also worked with Queen Mary University of London to run a formal qualitative study of the prototype using different programmes and in different listening environments. We found that chapters were rated as the most important feature, followed by links, images and transcripts. The features of our prototype worked best when listening at home, but certain elements were valued when used on public transport.

- BBC Taster - Try Even More or Less

- BBC R&D - Even More or Less: Designing a Data-Rich Listening Experience

Semantic speech editing

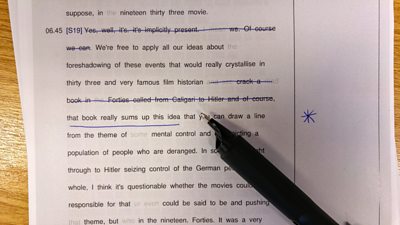

We developed a set of tools that allowed producers to navigate and edit speech recordings using transcripts instead of waveforms. We worked with producers in BBC Radio to test these tools in action. We found that when using automatically generated transcripts, producers could edit speech more easily and efficiently than when using existing tools.
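The general idea behind transcript-based editing can be sketched in a few lines. This is an illustrative assumption about how such a tool could work, not a description of our tools: the `Word` type, the `kept_segments` function, and the timings are hypothetical. It assumes a speech-to-text system has supplied a start and end time for each word, so that deleting words in the text can be turned into a list of audio ranges to keep.

```python
from dataclasses import dataclass
from typing import List, Set, Tuple


@dataclass(frozen=True)
class Word:
    # Hypothetical structure: one transcript word with its timing in seconds.
    text: str
    start: float
    end: float


def kept_segments(words: List[Word], deleted: Set[int]) -> List[Tuple[float, float]]:
    """Return the (start, end) audio ranges that remain after deleting words.

    `deleted` holds the indices of removed words. Runs of consecutive kept
    words are merged into a single continuous range.
    """
    segments = []
    run_start = None
    run_end = None
    for i, word in enumerate(words):
        if i in deleted:
            # A deletion closes the current run of kept audio, if any.
            if run_start is not None:
                segments.append((run_start, run_end))
                run_start = None
            continue
        if run_start is None:
            run_start = word.start
        run_end = word.end
    if run_start is not None:
        segments.append((run_start, run_end))
    return segments


# Illustrative timings only.
words = [
    Word("So", 0.0, 0.3),
    Word("um", 0.3, 0.7),
    Word("welcome", 0.7, 1.2),
    Word("back", 1.2, 1.6),
]

# Deleting "um" in the transcript leaves two audio ranges to join together.
print(kept_segments(words, {1}))  # [(0.0, 0.3), (0.7, 1.6)]
```

The resulting ranges could then be passed to an audio editor as an edit decision list. A real tool would also need to handle transcript errors and smooth the joins between segments.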

Our research also identified key user requirements for annotation, collaboration, portability, and listening. We then developed a novel digital pen interface for editing audio directly on paper. We tested it through a user study with radio producers, to compare the relative benefits of semantic speech editing using paper and screen interfaces. We found that paper is better for simple edits, for working with familiar audio, and when using accurate transcripts.

Immersive and Interactive Content section

The IIC section is a group of around 25 researchers investigating ways of capturing and creating new kinds of audio-visual content, with a particular focus on immersion and interactivity.

- BBC R&D - Paper Editor