In the first part of this series I wrote about why we think we should explain how Artificial Intelligence and Machine Learning work, what they’re good and bad at, and what their effects can be. In this second part I will look at some different ways of explaining AI, some different places where we could intervene in the world to explain it, and some projects which we are running at the BBC in this space.

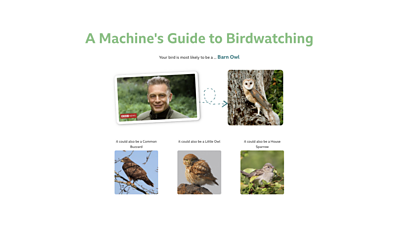

Our bird identification prototype was designed from the ground up to explain things. You can choose a picture to analyse or upload your own pictures. As well as attempting the identification, it lets you find out more about why it identified the bird as it did, and you can start to explore how it works and build up your mental model.

1. Build better mental models

We think that much of attempting to explain AI is about helping people make a mental model of the system to understand why it does what it does. Can we show how different inputs create different outputs, or let users play around with “what if” scenarios? Letting people explore how adjusting the system affects output should help them build a mental model, whether that’s changing the input data or some parameters or the core algorithms.

In our prototype, users can upload their own photos of birds, see the AI’s predictions and learn more about how and why it came to those answers. You can even play with uploading things that aren’t birds…

2. What it does and how it does it

We can try to explain some of the basics of machine learning and AI, like explaining how this technology is based on large amounts of data and learns by example in the training phases. Or show how AI makes predictions and classifications, but only based on what it has learned from. We could also explain what the system uses AI to do.
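To make the point about predictions being based only on what has been learned more concrete, here is a minimal sketch of a classifier that learns by example. Everything in it is made up for illustration: the two features (body length in centimetres and a 0 to 1 "redness" score), the training examples and the function name `nearest_label` are assumptions, not code from our prototype.

```python
import math

# Hypothetical training examples: (label, (body_length_cm, redness)).
TRAINING = [
    ("robin", (14.0, 0.9)),
    ("robin", (13.0, 0.8)),
    ("mallard", (58.0, 0.2)),
    ("mallard", (55.0, 0.1)),
]


def nearest_label(examples, features):
    """Return the label of the training example closest to `features`."""
    label, _ = min(examples, key=lambda example: math.dist(example[1], features))
    return label


print(nearest_label(TRAINING, (15.0, 0.85)))  # a small, red-breasted bird
print(nearest_label(TRAINING, (20.0, 0.1)))   # something that is not a bird at all
```

Both calls return a bird name, because bird names are the only answers this toy model has ever seen. It has no way of saying "that isn’t a bird".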

What are its limitations? Where doesn’t it work so well and what might go wrong? “Model cards”, for example, are designed to explain what AI technology is used, where it performs best or worst and what its expected error rate is. These model cards are aimed at developers and interested professionals, but what might be the consumer equivalent?

3. Show (un)certainty

Most current AI systems are based around predicting a result for an input, based on previous examples that have been learned. We should show where there is uncertainty in the outputs, and/or indicate the accuracy. This could be a straightforward confidence label, or a ranking of possible predictions. In the bird ID app we show other high-scoring predictions to the users to help them understand what’s going on.

In our prototype we used words to represent our confidence in the results (“most likely”, “could be”); other systems commonly show confidence as a numerical percentage. We also show several of the top possible predictions, not just the top result.

This might show that the prediction was difficult for anybody (all these birds also look the same to humans) or particularly hard for the system (humans can easily see the difference, but the AI can’t distinguish between them). Showing the results might also help the user make a better guess at the correct answer from the possibilities shown, with the AI acting more as an assistive technology.
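As a rough sketch of how ranked predictions with worded confidence could be produced, the example below turns raw model scores into probabilities and maps them to the two phrases mentioned above. The thresholds (`likely_threshold`, `min_probability`), the function names and the example scores are all assumptions chosen for illustration, not the values used in our prototype.

```python
import math


def softmax(scores):
    """Turn raw model scores into probabilities that sum to 1."""
    highest = max(scores)
    exps = [math.exp(s - highest) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def confidence_phrase(probability, likely_threshold=0.5):
    """Map a probability to a worded confidence label (threshold is assumed)."""
    return "most likely" if probability >= likely_threshold else "could be"


def top_predictions(labels, scores, k=3, min_probability=0.05):
    """Return up to k (label, phrase, probability) tuples, best first."""
    ranked = sorted(zip(labels, softmax(scores)), key=lambda pair: pair[1], reverse=True)
    return [
        (label, confidence_phrase(p), p)
        for label, p in ranked[:k]
        if p >= min_probability
    ]


# Hypothetical raw scores for one uploaded photo.
labels = ["robin", "wren", "dunnock", "blackbird"]
scores = [4.0, 3.0, 1.0, -1.0]
for label, phrase, p in top_predictions(labels, scores):
    print(f"{phrase}: {label} ({p:.0%})")
```

Dropping very unlikely candidates keeps the list short, while still showing the user that there was more than one plausible answer.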

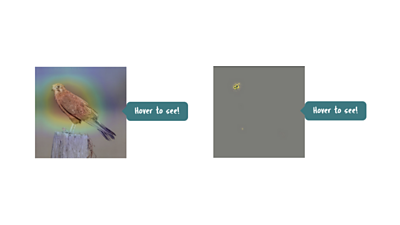

4. Understandable features

Can we find human-understandable features used by the AI, and can we find useful ways to show them? What are the features that are most important in the model and can we use this data to generate human-readable or understandable explanations? This technique is highly coupled to the problem domain — things that are visual are probably easier to show than more abstract problems.

In our bird application we display what the AI focuses on and what detail it “sees” to make its prediction. In the example above you can see that it is focusing on the bird, not the fence post, and particularly on the beak and eye area. As a user you can then start to think about whether it is focusing on an identifying feature of the bird, or some extraneous detail.
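One general way to find out which parts of an image matter to a model is occlusion sensitivity: cover up one patch at a time and see how much the model’s score drops. The sketch below illustrates that idea only; it is not the code behind our prototype. The function `occlusion_map` and the stand-in model `toy_score` are hypothetical, and the "image" is just a small grid of numbers.

```python
def occlusion_map(image, score_fn, patch=2, baseline=0.0):
    """Return a grid of score drops: bigger values mean the model relied more on that area."""
    height, width = len(image), len(image[0])
    base_score = score_fn(image)
    heat = [[0.0] * width for _ in range(height)]
    for top in range(0, height, patch):
        for left in range(0, width, patch):
            rows = range(top, min(top + patch, height))
            cols = range(left, min(left + patch, width))
            # Copy the image and blank out one patch.
            occluded = [row[:] for row in image]
            for y in rows:
                for x in cols:
                    occluded[y][x] = baseline
            # Record how much the score fell when this patch was hidden.
            drop = base_score - score_fn(occluded)
            for y in rows:
                for x in cols:
                    heat[y][x] = drop
    return heat


def toy_score(image):
    """Stand-in for a real model: it only ever looks at the top-left 2x2 corner."""
    return (image[0][0] + image[0][1] + image[1][0] + image[1][1]) / 4


image = [[1.0] * 4 for _ in range(4)]
for row in occlusion_map(image, toy_score):
    print(row)
```

With a real classifier, `score_fn` would return the model’s confidence in the predicted species, and the resulting grid could be drawn over the photo as a heatmap.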

5. Explain the data

All AI is based on training data and we can try to explain more about the data used to give insight into the system. Where does the data come from and how was it collected? What are the features used for training? How much data is there? Might it be biased, and towards what?

Here we show an overview of the bird dataset and explain its make-up. The area of each bird image is relative to the number of examples of each bird in the dataset. As you can see, our dataset appears to be biased towards ducks, starlings and sparrows! We used birds as an example so that we could start to show issues like bias and dataset composition on a relatively neutral subject.
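The numbers behind an overview like this are simple to compute. The sketch below counts how often each label appears and flags any class that takes up much more than an even share. The label counts are invented for illustration and are not our dataset’s real figures; `class_shares`, `over_represented` and the `factor` cut-off are hypothetical names and values.

```python
from collections import Counter


def class_shares(labels):
    """Return each class's share of the dataset, largest first."""
    counts = Counter(labels)
    total = sum(counts.values())
    return {name: count / total for name, count in counts.most_common()}


def over_represented(shares, factor=1.5):
    """List classes whose share is more than `factor` times an even split."""
    even_share = 1 / len(shares)
    return [name for name, share in shares.items() if share > factor * even_share]


# Invented example labels, one per training image.
labels = ["duck"] * 6 + ["starling"] * 2 + ["wren"] + ["robin"]
shares = class_shares(labels)
for name, share in shares.items():
    print(f"{name}: {share:.0%}")
print("Over-represented:", over_represented(shares))
```

The shares could then be used directly as the relative areas in a picture like the one shown here.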

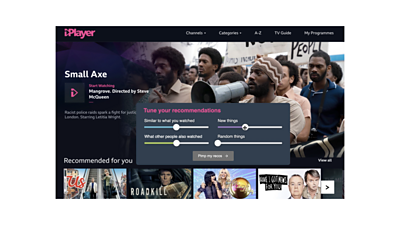

6. Give control…

Testing that we carried out on explaining TV programme recommendations showed that people appreciate being given some control over the AI: being able to tweak parameters, see and edit the personal data that is used by the AI, or provide feedback on the recommendations to tune the system. Being able to control the system should also help build better mental models.

Other resources we’ve found particularly useful in this work, with its focus on explaining AI to users, include these sets of design guidelines from and .

Places to explain

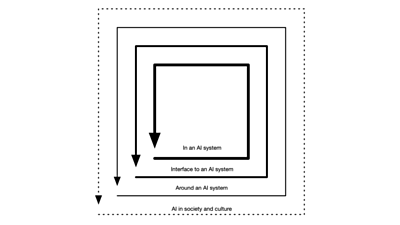

A wider question we have been asking is where in the system (or the world) should you be explaining things, for the best possible understanding. By thinking of the world as we can think about how the whole might be changed.

On the “inside” of this diagram are the types of things that I’ve written about so far in this article:

1. Ways to inspect and understand within your AI system; for engineers and more technical users of AI systems.

2. Explaining what’s going on in the interface to the AI. This can be hard though: it’s arguably making the application harder to use and adding friction. And that’s assuming there is a legible interface in the first place, given the invisibility of AI.

3. Explaining in the context around the AI; you could write a tutorial about the AI in your system or include explanations in the marketing material.

But beyond a particular application or service, there are other layers in society, culture and the world where we could explain AI.

- We could explain AI better in our education system; whether that’s school, college or later in life.

- We could aim for AI to be explained and represented more accurately when it is featured or mentioned in the media, whether that’s in the news or in popular culture like films.

- The to talk about AI how it’s generally understood.

I think, as we go outwards in these abstraction layers, the methods of explaining become further away from a particular instance of technology and become slower to take effect, but may become more influential in the longer term.

What we’re doing at BBC R&D

We think it's particularly important to help younger people understand AI, because they're going to be more affected by it over their lives than most of us. To that end we have been prototyping some games and videos that could be used in educational situations, and we’ve been doing outreach work with schools to see how well these work.

Here is an example of AI in the media. You’ve probably seen images like this used to represent AI. But it’s not very accurate: most AI isn’t robots, and they certainly don’t write down equations and ponder them. Other frequently used images of AI contain glowing blue brains or gendered robots. We think we could do better than that.

We’re currently working towards making a better library of stock photos for AI with our partners at and the ; images that are more accurate, more representative and less clichéd. Hopefully, eventually, this sort of intervention might slowly seep into the world and change mental models and our understanding of AI in a lasting way.

VQ-GAN, an AI system for generating images, created this when prompted with “machine learning”.

The BBC has also developed internal guidelines for AI explainability, focusing on how to make machine learning projects more inclusive, collaborative and interdisciplinary, ensuring that all our colleagues can feed into the design of our AI-based systems.

We have recently published these Machine Learning Engine Principles which describe how to make responsible and ethical decisions when designing and building AI systems. With our and our commitment to responsible AI and to explain our use of AI in plain English we have been exploring innovative ways of explaining AI. We want to raise awareness of the different ways AI can be explained, and kickstart this in different places around the BBC and elsewhere.

Internet Research and Future Services section

The Internet Research and Future Services section is an interdisciplinary team of researchers, technologists, designers, and data scientists who carry out original research to solve problems for the BBC. Our work focuses on the intersection of audience needs and public service values, with digital media and machine learning. We develop research insights, prototypes and systems using experimental approaches and emerging technologies.