It’s July 4th, 2019. A sound designer and a theatre maker stand in a grassy field. In response to a research brief from our team, they’re improvising a series of audio recordings that imagine a multi-dimensional world, fanning out across possible universes from the park in which they stand. This is the story of how we transformed those recordings into a playable audio experience.

Looking For Nigel is a prototype multiverse-spanning audio augmented reality (AAR) story for smart headphones. You're invited to locate and interact with a series of alternate realities as you help Nigel find his parallel-world friends. It's an adventure that uses spatial audio, geolocation, voice recognition and physical gestures to take you on a journey through universes without ever leaving your local park.

Setting the scene

We've been interested in audio-only AR for a while, so we decided to build a prototype experience of our own, to get a better sense of what was necessary: a classic learning-by-doing experiment. What kind of tools are needed? What types of production workflow? What creative approach should we take? By answering some of these questions, we could help colleagues in the BBC and elsewhere to think about and develop their own experiences.

We also wanted to continue our research into voice interactions, and follow up some of the promising strands from Please Confirm You are not a Robot. This was our previous AAR project, made with collaborators from Queen Mary University of London.

Not a Robot had cemented our feeling that understanding embodied interaction (how you use your whole body to interact with an experience) and your body's movement through space were crucial to a successful AAR experience. We particularly admired how experiments by other creators used movement as an interaction mechanism. However, we noted that many of the experiences we tried or discovered in our research were bound to a particular set of GPS co-ordinates - the streets of Whitechapel, say, or Chicago. We felt that anything we made should be free of this constraint to a particular place.

Guided by this core concept, the first order of business was to identify a kind of place common enough for a broad audience to have access to it. This place would have to be within a reasonable distance of their home, safe to roam and, crucially, with features predictable enough to enable a coherent narrative. We decided that public parks would fit the bill nicely.

As with Not a Robot, we decided to continue to use the Bose Frames paired with an iPhone as our target platform for the experience. We were already familiar with development for this setup, and the pairing of the Frames' onboard accelerometer and gyroscope with the phone's movement sensors, GPS and voice recognition would allow exploration of a wide range of user interactions.

With all of these research ideas and goals in mind, we held a couple of workshops with Ben (and his brother, Max), exploring the sonic and interaction potential of the Frames and the creative possibilities presented by our research aims. We sketched out the bones of a story: you would be on a quest to find multiple parallel universe versions of a character, who only you could hear via the Frames on your head. Armed with this knowledge, Ben took Nigel to the park, and they improvised some test material for us, which we would then try to build into an interactive experience.

Beginning to build the experience

To make our imagined experience viable, we needed two things:

- a virtual representation of the player's physical environment;

- the ability to track player movement through this environment, with sufficient accuracy.

A virtual representation of the environment would require knowledge of the player's location and a method to access data about their surroundings. While GPS is a tried-and-tested technology for location detection, our initial instinct was that it wouldn't track movement with sufficient sensitivity. Nor, it turned out, could we rely on the Frames' accelerometer for the age-old technique of 'dead reckoning' (determination of position without 'celestial aid' like GPS), since the data was insufficient to track step-by-step movement.

Our next step (excuse the pun) was to explore harnessing the system pedometer available on most smartphones. This was straightforward enough to access, but only triggered after every few steps, and provided no indication of the player's direction, nor whether they were simply stepping forward and back repeatedly!

Thus we came full circle to GPS as the most viable method to track player movement through space, while aware that we would need to account for a margin of error. This also had a knock-on effect in the spatial design for the experience; we needed to make sure that no important objects in the virtual world were smaller than the GPS's error margin - around 5 metres on a good day.
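To make the error-margin constraint concrete, here is a minimal Python sketch (not the project's code, which was built in Unity). It assumes positions arrive as latitude/longitude pairs and treats the 5 metre figure as a fixed constant, although real GPS accuracy varies. The names `haversine_m`, `has_reached` and `GPS_ERROR_M` are hypothetical.

```python
import math

EARTH_RADIUS_M = 6_371_000.0  # mean Earth radius in metres
GPS_ERROR_M = 5.0             # assumed fixed error margin; real accuracy varies


def haversine_m(lat1, lon1, lat2, lon2):
    """Great-circle distance in metres between two latitude/longitude points."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = phi2 - phi1
    dlam = math.radians(lon2 - lon1)
    a = (math.sin(dphi / 2) ** 2
         + math.cos(phi1) * math.cos(phi2) * math.sin(dlam / 2) ** 2)
    return 2 * EARTH_RADIUS_M * math.asin(math.sqrt(a))


def has_reached(player, target, radius_m):
    """True if the player is within the target's trigger radius.

    The radius is never allowed to shrink below the GPS error margin,
    otherwise a player standing on the target might never register.
    """
    effective_radius = max(radius_m, GPS_ERROR_M)
    return haversine_m(*player, *target) <= effective_radius
```

A call such as `has_reached((51.5, -0.1), (51.50002, -0.1), 1.0)` still succeeds even though the requested radius is only one metre, because the effective radius is clamped to the error margin.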

Building a mirror world

As we were making a 'virtual mirror' of the player's physical space and using that representation to render spatial audio on the fly, a 3D development environment was essential. We settled on using Unity since, as a well-established 3D engine, it offers access to a deep well of community knowledge and a variety of plugins that make it easy to extend. For these reasons, it's one of the de facto development platforms for VR & AR experiences. Additionally, an SDK (software development kit) for the Bose Frames was already available for Unity.

Our map and location information would come from the Mapbox SDK. Using this plugin, we were able to abstract away all the challenges of replicating the physical world inside our game by dropping a 'Map' Object into a Unity scene. We could then track someone's movement through this replication by connecting their real-world motion to a Player Object in the same Unity scene.

Mapbox enables pulling in data like elevation, roadmaps and 3D features such as buildings, complete with their correct height. For our purposes, however, we only needed park information, so pulled this in via the Mapbox API and then flattened everything into two dimensions (with GPS we could only usefully track a player's two-dimensional movement anyway).
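As an illustration of what flattening to two dimensions can look like, the Python sketch below converts latitude/longitude into east/north metres relative to a chosen origin. It uses a simple equirectangular approximation, which is an assumption made for brevity and not a description of how the Mapbox plugin projects its map. The function name `to_local_xy` is hypothetical.

```python
import math

EARTH_RADIUS_M = 6_371_000.0  # mean Earth radius in metres


def to_local_xy(lat, lon, origin_lat, origin_lon):
    """Project a lat/lon point to (east, north) metres relative to an origin.

    Equirectangular approximation: reasonable over park-sized distances,
    increasingly inaccurate over many kilometres or near the poles.
    """
    east = (math.radians(lon - origin_lon)
            * EARTH_RADIUS_M
            * math.cos(math.radians(origin_lat)))
    north = math.radians(lat - origin_lat) * EARTH_RADIUS_M
    return east, north
```

Working in local metres keeps later geometry (distances, containment tests) in plain 2D arithmetic, with elevation ignored entirely.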

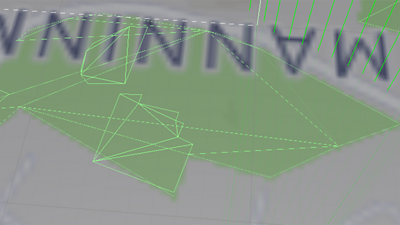

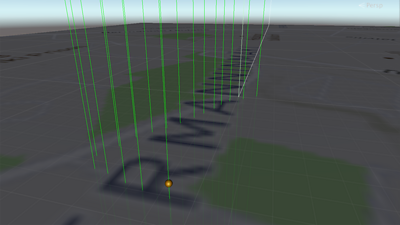

We use a method called raycasting to check if a player is inside a park. In the virtual representation of their surroundings, we project a 'ray' (straight line) downwards from their position. If that ray intersects the shape defined by a park in the map data, we can gather information to perform geographic operations, and thus start the experience.
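The check described here fires a ray downwards through a 3D scene in Unity. A closely related 2D technique, shown below as a hedged Python sketch and not as the project's implementation, is the even-odd point-in-polygon test: cast a horizontal ray from the player's position and count how many park edges it crosses. The function name and the use of local metre coordinates are assumptions.

```python
def point_in_polygon(point, polygon):
    """Even-odd rule: cast a ray from the point towards +x and count crossings.

    `polygon` is a list of (x, y) vertices in order; the last vertex is
    implicitly joined back to the first.
    """
    x, y = point
    inside = False
    n = len(polygon)
    for i in range(n):
        x1, y1 = polygon[i]
        x2, y2 = polygon[(i + 1) % n]
        # Only edges that straddle the ray's y level can be crossed.
        if (y1 > y) != (y2 > y):
            # x coordinate at which this edge passes through that level.
            x_cross = x1 + (y - y1) * (x2 - x1) / (y2 - y1)
            if x_cross > x:
                inside = not inside
    return inside
```

An odd number of crossings means the point is inside the park outline; an even number means it is outside, which also handles concave park shapes.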

We can also use this park representation to build a 'wall' around the park, allowing us to respond when the player leaves - initially by firing a warning sound and then exiting the experience completely.

Where are we again?

Our story's narrative revolves around the conceit that only sound can travel inter-dimensionally (while smells only travel temporally - a future research project, perhaps).

Our next challenge was to position sounds in the virtual space around a player's head to guide them towards set-pieces placed around the park.

Here we turned to Resonance Audio, a spatial audio toolkit. Resonance is also available as a Unity plugin, making it relatively straightforward to spatially locate a sound in relation to the player.

But where to place this sound?

Initially, we considered delving into the fine-grained Mapbox data to place sounds up in trees or bushes.

However, we quickly realised that not every park necessarily contains a tree (nor are trees reliably mapped or permanent - if a tree falls in a park, a map definitely doesn't hear it).

Instead, we decided to place wayfinding sound objects randomly inside the park. We enjoyed the idea that this sonic treasure hunt would encourage individuals to rediscover their familiar parks in a new light.

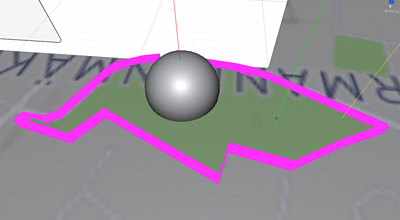

Again, rays are used to find locations for these sound objects inside the park, accepting or rejecting the location depending on a predefined minimum and maximum distance from the player.

The yellow ray is a position that was rejected while the red ray was accepted. The grey sphere is our wayfinding sound object.
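The accept-or-reject placement can be sketched as rejection sampling. The Python below is an illustrative assumption and not the Unity code: it draws random candidates from the park's bounding box and keeps the first one that lies inside the park outline and within the allowed distance band from the player. `place_sound`, its parameters and the attempt limit are hypothetical, and a small point-in-polygon helper is included so the block runs on its own.

```python
import math
import random


def point_in_polygon(point, polygon):
    """Even-odd crossing test for a point against a list of (x, y) vertices."""
    x, y = point
    inside = False
    n = len(polygon)
    for i in range(n):
        x1, y1 = polygon[i]
        x2, y2 = polygon[(i + 1) % n]
        if (y1 > y) != (y2 > y):
            if x1 + (y - y1) * (x2 - x1) / (y2 - y1) > x:
                inside = not inside
    return inside


def place_sound(park, player, min_dist, max_dist, rng, max_attempts=1000):
    """Return a random (x, y) inside the park at an acceptable distance.

    Candidates are rejected if they fall outside the park or are closer
    than `min_dist` / further than `max_dist` from the player. Returns
    None if no candidate is accepted within `max_attempts` tries.
    """
    xs = [p[0] for p in park]
    ys = [p[1] for p in park]
    for _ in range(max_attempts):
        candidate = (rng.uniform(min(xs), max(xs)),
                     rng.uniform(min(ys), max(ys)))
        distance = math.dist(candidate, player)
        if min_dist <= distance <= max_dist and point_in_polygon(candidate, park):
            return candidate
    return None
```

Passing in a seeded `random.Random` makes placements repeatable while testing; capping the attempts avoids looping forever in a park too small to satisfy the distance band.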

Here we faced the problem that although parks are green and open spaces, they sometimes contain buildings or other features that might put a dampener on the player's experience (such as an unexpected body of water…).

To address this, we isolated more features from the Mapbox data. Offending buildings and water were labelled as 'not park' and floated slightly above ground level, like a jigsaw puzzle with some of the pieces not fully inserted. This meant that when we fired our ray down, it would first hit these features and then know to try again.
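The 'raised jigsaw piece' idea can be modelled without a physics engine. In this hedged Python sketch, each feature carries a height, and the feature a downward ray would meet first is simply the highest one covering the point. Axis-aligned rectangles stand in for real map shapes to keep the example short, and the `Feature` class, labels and heights are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Feature:
    label: str     # "park" or "not park"
    height: float  # how far the shape floats above the ground plane
    bounds: tuple  # (min_x, min_y, max_x, max_y); rectangles for brevity

    def contains(self, x, y):
        min_x, min_y, max_x, max_y = self.bounds
        return min_x <= x <= max_x and min_y <= y <= max_y


def first_hit(features, x, y):
    """Label of the feature a ray fired straight down at (x, y) meets first.

    The ray travels from above, so the highest covering feature wins.
    Returns None when nothing lies under the point.
    """
    hits = [f for f in features if f.contains(x, y)]
    if not hits:
        return None
    return max(hits, key=lambda f: f.height).label


def is_valid_spot(features, x, y):
    """A spot is usable only if the first thing the ray hits is park."""
    return first_hit(features, x, y) == "park"
```

With a park at height 0 and a pond at height 1 inside it, a candidate over the pond reports 'not park' and can be re-rolled, while the surrounding grass still counts as park.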

Rosie and the arrival of narrative structure

At this point, we'd implemented the bare bones of the experience: walking around to find Nigel and a conversation with 'another' Nigel. But something was lacking.

Rather than a beginning, a middle and an end, we had a series of set-pieces. We needed dramatic tension, an arc… and a villain!

Enter Rosie, a digital artist and real-world game designer who worked with us as part of her PhD on embodied storytelling at UWE Bristol. Rosie's experience and approach were a perfect fit for this project, and she got to work doing an amazing job of weaving a cohesive story from the disparate parts. She explored embodied interactions more deeply and even introduced our longed-for villain: Evil Nigel.

In the second installment of this short series: how we built the sound environment for our audio AR experience, and our tips and recommendations for anyone interested in making their own.


Internet Research and Future Services section

The Internet Research and Future Services section is an interdisciplinary team of researchers, technologists, designers, and data scientists who carry out original research to solve problems for the BBC. Our work focuses on the intersection of audience needs and public service values, with digital media and machine learning. We develop research insights, prototypes and systems using experimental approaches and emerging technologies.