Since our IP Studio project started in 2012, BBC R&D has been focused on bringing about the transition of broadcast production systems to IP. We’ve talked on this blog before about the many benefits of moving to systems based on IT industry technology. We’re now starting to see a real uptake of these ideas, with the new broadcast centre we’re building in Cardiff set to be the first BBC building built around an IP core.

BBC Cardiff Central Square under construction.

Much of this work has been going on at the same time as the IT industry has been undergoing a revolution of its own – the transition to cloud computing. In the Cloud-Fit Production team, we’re looking at how broadcast production processes can harness the flexibility, scalability and resilience benefits cloud computing has brought to the IT industry. In previous blog posts, we’ve talked about our Cloud Media Object Store, which we’re basing much of our work around. We’ve also looked at ingesting video into the store “as a service” using our File Ingest Service.

Push and Pull - Joining it all up

Our Media Object Store is based around the idea that media is stored as “Objects”, which clients can request from the Store in any order, at any time. This “pull-based” way of handling media is very different from the “push-based” model of stream-based protocols like RTP, of which SMPTE ST 2110 is an example. In the stream-based approach, servers send a continuous stream of video to the client, whereas in the pull-based model, clients request media objects from the server as and when they need them.

The “push-based” approach works well on the high-speed, low-latency networks commonly found in a major broadcast centre. But it relies heavily on tightly constrained network conditions, which are very hard to achieve in the cloud, where we have little or no control over the network. In the cloud, it is important to , for example, by using multiple and . Such techniques are hard to apply to the point-to-point nature of streams, but far easier to apply to the pull-based model of our cloud-fit production architecture.
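Resilience of this kind is easy to express in a pull-based client, because each object is requested independently and a failed request can simply be retried somewhere else, with no stream state to recover. The sketch below is a minimal illustration only: it assumes each replica of the Store is modelled as a hypothetical callable that returns an object's bytes or raises on failure (this is not the Store's real API), and trying redundant replicas in turn is just one example of a resilience technique.

```python
from typing import Callable, List, Sequence


class FetchError(Exception):
    """Raised when no replica could supply the requested object."""


def fetch_with_fallback(object_id: str,
                        replicas: Sequence[Callable[[str], bytes]]) -> bytes:
    """Pull one object, trying each (hypothetical) replica until one succeeds.

    Because every object is requested on its own, a failure only costs a
    retry of that single object rather than a broken stream.
    """
    errors: List[Exception] = []
    for get in replicas:
        try:
            return get(object_id)
        except Exception as exc:  # a real client would catch narrower errors
            errors.append(exc)
    raise FetchError(f"all {len(replicas)} replicas failed for {object_id}: {errors}")
```

A replica that is down just raises, and the client moves on to the next one for that object only.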

What is SMPTE ST 2110?

SMPTE ST 2110 is a family of standards for carrying audio, video and ancillary data over IP computer networks. Unlike many IP video formats, the currently deployed generation of SMPTE ST 2110 standards and equipment does not compress media, meaning it is best suited to environments where low latency and high quality are more important than bandwidth limitations.

This means it tends to be used mostly on local networks in live broadcast production environments rather than over the internet, since the high bandwidth and tight latency control it requires are easier to achieve there.

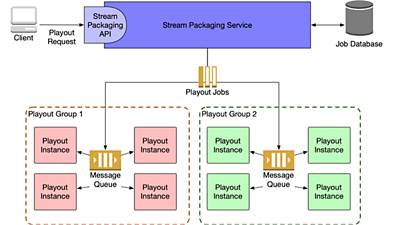

Playout jobs are allocated to Playout groups depending on the kind of instance required to play them out.

The ability of stream-based protocols to provide low latency at very high data rates means they will probably be with us for a long time yet, connecting much of the physical infrastructure in conventional broadcast centres. Over the last few months, the Cloud-Fit Production team has been investigating what the interface between these push and pull domains looks like, to understand how media can flow seamlessly between the two.

To support this investigation, we’ve been building the Stream Packaging Service. The Stream Packaging Service is designed to pull media from a Media Object Store and then stream it out in a conventional push-based stream format.
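The heart of the push/pull boundary is timing: objects arrive whenever they happen to be fetched, but a stream has to leave at a steady rate. A minimal sketch of that pacing step follows. It assumes a hypothetical `send` callable standing in for the network output; the post does not describe how the service actually paces its output. The clock and sleep functions are injectable so the logic can be checked without real waiting.

```python
import time
from typing import Callable, Iterable


def paced_dispatch(frames: Iterable[bytes],
                   send: Callable[[bytes], None],
                   fps: float,
                   clock: Callable[[], float] = time.monotonic,
                   sleep: Callable[[float], None] = time.sleep) -> int:
    """Push frames out at a fixed frame rate, however they were pulled in.

    Each frame gets an absolute deadline (start + i * period), so small
    scheduling delays do not accumulate into drift over a long stream.
    Returns the number of frames sent.
    """
    period = 1.0 / fps
    start = clock()
    count = 0
    for i, frame in enumerate(frames):
        deadline = start + i * period
        delay = deadline - clock()
        if delay > 0:          # early: wait for this frame's slot
            sleep(delay)
        send(frame)            # late frames are sent immediately
        count += 1
    return count
```

Scheduling against absolute deadlines rather than sleeping a fixed period after each send keeps the long-run rate exact even when individual sends are slow.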

Simplified Stream Packaging Service Architecture Diagram

Stream Packaging Service Architecture – Not All Streams are Alike!

We knew from the start that we wanted the Stream Packaging Service to be as extensible as possible. An important requirement was to use the same service for coded video (e.g. H.264 streams) as for uncompressed, production-quality video (e.g. SMPTE ST 2110). But we also knew it would be difficult to use exactly the same codebase for all of these jobs. To solve this, the service is designed around a high-level “Stream Packaging API”, which allocates jobs to different pools of “Playout Instances” depending on the requirements of each job.

Playout instances are specialists, with specific software, or even hardware, depending on the job they are designed to do. For example, a playout instance for streaming H.264 might be software running on a relatively small compute instance in a remote cloud. In contrast, a playout instance for SMPTE ST 2110 may be , with specialist network hardware. In principle, a playout instance could even play out to baseband formats such as SDI.
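Allocating a job to a pool of specialists can be as simple as a lookup from the requested output to a Playout Instance Group. A minimal sketch, in which the group names, the `PlayoutJob` fields and the format keys are all hypothetical rather than taken from the real service:

```python
from dataclasses import dataclass

# Hypothetical mapping from requested output format to Playout Instance Group.
PLAYOUT_GROUPS = {
    "h264": "software-h264",      # small cloud compute instances
    "st2110": "st2110-hardware",  # instances with specialist network hardware
    "sdi": "baseband-sdi",        # instances with baseband output
}


@dataclass
class PlayoutJob:
    flow_id: str        # which Flow in the Media Object Store to play out
    output_format: str  # which kind of stream the client wants


def select_group(job: PlayoutJob) -> str:
    """Return the Playout Instance Group able to run this job."""
    try:
        return PLAYOUT_GROUPS[job.output_format]
    except KeyError:
        raise ValueError(f"no playout group handles {job.output_format!r}") from None
```

Rejecting unknown formats at submission time avoids queuing a job that no instance could ever pick up.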

As with our other services, jobs are submitted to the Stream Packaging Service by making a request to its REST API. Jobs are stored in a central database so they can be logged and monitored. The service then places each job on a highly available queue corresponding to the Playout Instance Group the job should be assigned to. Playout instances take jobs from this queue whenever they are free and begin processing them. Because any free instance in the group can pick up a job, this architecture helps keep the service itself highly available: a job will always reach a playout engine as long as one is available in the group.
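The job flow described above can be sketched in memory to show how the pieces fit together. This is a minimal illustration under loud assumptions: a dictionary stands in for the central database, a standard-library `queue.Queue` per group stands in for the highly available message queue (it is not highly available), and the class and function names are hypothetical.

```python
import queue
import threading
import uuid
from typing import Dict, Iterable


class StreamPackagingSketch:
    """In-memory stand-in for the API, job database and per-group queues."""

    def __init__(self, groups: Iterable[str]):
        self.jobs: Dict[str, dict] = {}                              # "central database"
        self.queues: Dict[str, queue.Queue] = {g: queue.Queue() for g in groups}
        self._lock = threading.Lock()

    def submit(self, group: str, flow_id: str) -> str:
        """What a REST handler might do: record the job, then enqueue it."""
        job_id = str(uuid.uuid4())
        with self._lock:
            self.jobs[job_id] = {"flow_id": flow_id, "group": group, "status": "queued"}
        self.queues[group].put(job_id)
        return job_id

    def set_status(self, job_id: str, status: str) -> None:
        with self._lock:
            self.jobs[job_id]["status"] = status


def playout_worker(svc: StreamPackagingSketch, group: str, stop: threading.Event) -> None:
    """A playout instance: take a job whenever free, process it, report back."""
    q = svc.queues[group]
    while not stop.is_set():
        try:
            job_id = q.get(timeout=0.1)  # poll so the worker can notice `stop`
        except queue.Empty:
            continue
        svc.set_status(job_id, "running")
        # ... fetch media from the Store and dispatch the stream here ...
        svc.set_status(job_id, "done")
        q.task_done()
```

Any number of workers can share a group's queue, so losing one instance only reduces capacity; queued jobs still reach whichever instance is free.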


Doing it Quickly

The Stream Packaging Service posed the Cloud-Fit team some interesting challenges. Firstly, the media in the Store can be (and often is) stored as raw, uncompressed data. For HD, this results in data rates in excess of a gigabit per second (Gb/s), with UHD exceeding 8 Gb/s. Such high data rates require efficient data processing and well-optimised code. This data either needs to be compressed on the fly or passed as-is, at full rate, to a Network Interface Card (NIC).
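The figures above follow from simple arithmetic. This sketch assumes 10-bit 4:2:2 sampling (an average of 20 bits per pixel) at 50 frames per second, and counts only the active picture, ignoring blanking and packet overhead; the post does not state which formats its figures were based on.

```python
def raw_video_bitrate(width: int, height: int, bits_per_pixel: int, fps: float) -> float:
    """Active-picture data rate, in bits per second, for uncompressed video."""
    return width * height * bits_per_pixel * fps


# Assumption: 10-bit 4:2:2 averages 20 bits per pixel
# (10 bits of luma plus 10 bits of alternating Cb/Cr), at 50 frames per second.
hd = raw_video_bitrate(1920, 1080, 20, 50)
uhd = raw_video_bitrate(3840, 2160, 20, 50)
print(f"HD:  {hd / 1e9:.2f} Gb/s")   # HD:  2.07 Gb/s
print(f"UHD: {uhd / 1e9:.2f} Gb/s")  # UHD: 8.29 Gb/s
```

Since UHD has four times the pixels of HD, its raw rate is four times higher at the same sampling and frame rate.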

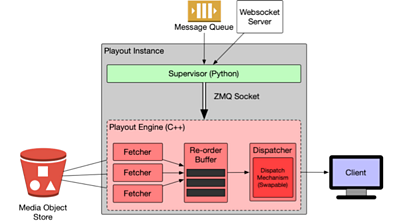

Most of the existing code for the Cloud-Fit Production project is written in Python, but because Python is an interpreted, high-level language, we knew it would be difficult or impossible to achieve the data throughput required for raw video with it. To address this, we decided to split the Playout Instance into two halves: a “Playout Engine” written in C++ to do the actual video processing, and a Python supervisor acting as the interface with the rest of the system.

C++ is a lower-level, strongly typed language, which makes it easier to write the performant code needed to process video, and as a team we were already familiar with it from our previous work on the IP Studio project. However, we wanted the higher-level work of managing jobs to be done in Python: that work does not need the performance of C++, and Python is generally faster to develop in.

The Playout Engine itself comprises two principal blocks. A “Fetcher Engine” runs multiple “Fetchers” in parallel to get media objects from the Store, placing them into a “re-order buffer” so that they can be put back into sequence. Objects are then pulled out of this buffer by the “Dispatcher” component, whose implementation varies depending on the kind of Playout Instance. In our first trial, our Dispatcher uses FFmpeg to code streams as H.264, but we plan to build other Dispatchers in future capable of producing SMPTE ST 2110 and other formats.
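The fetch-and-reorder logic can be sketched in Python purely to show the idea; the real Playout Engine is written in C++. This sketch assumes objects are identified by contiguous integer sequence numbers and that `fetch` is a hypothetical callable returning one object's bytes; neither detail comes from the post. A min-heap keyed on sequence number lets the buffer release an object only once every earlier one has arrived.

```python
import heapq
from concurrent.futures import ThreadPoolExecutor, as_completed
from typing import Callable, Iterable, Iterator, List, Tuple


class ReorderBuffer:
    """Accepts objects in any order and releases them strictly in sequence."""

    def __init__(self, first_index: int = 0):
        self._heap: List[Tuple[int, bytes]] = []
        self._next = first_index

    def put(self, index: int, data: bytes) -> None:
        heapq.heappush(self._heap, (index, data))

    def pop_ready(self) -> Iterator[bytes]:
        """Yield every object whose turn has come; stop at the first gap."""
        while self._heap and self._heap[0][0] == self._next:
            _, data = heapq.heappop(self._heap)
            self._next += 1
            yield data


def fetch_in_order(indices: Iterable[int], fetch: Callable[[int], bytes],
                   workers: int = 4) -> Iterator[bytes]:
    """Run several fetchers in parallel and hand results on in sequence.

    Assumes the indices are contiguous; a missing index would stall the buffer.
    """
    indices = list(indices)
    if not indices:
        return
    buf = ReorderBuffer(first_index=min(indices))
    with ThreadPoolExecutor(max_workers=workers) as pool:
        futures = {pool.submit(fetch, i): i for i in indices}
        for fut in as_completed(futures):      # completion order, not request order
            buf.put(futures[fut], fut.result())
            yield from buf.pop_ready()         # release whatever is now in sequence
```

A Dispatcher would then simply iterate over `fetch_in_order(...)`, receiving objects in order however the fetches happened to finish.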

Meanwhile, the Python supervisor fetches jobs from the message queue corresponding to its pool. For each job, it retrieves the list of Objects from the Store and then passes their locations to the Fetchers via a to be fetched and dispatched.
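The supervisor's part can be sketched in a few lines. This is a minimal illustration only: the job dictionary, the hypothetical `list_objects` lookup, the end-of-job marker and the use of an in-process `queue.Queue` to reach the fetchers are all assumptions, since the post does not name the mechanism the real supervisor uses to talk to the C++ engine.

```python
import queue
from typing import Callable, List, Optional

# Hypothetical marker telling the fetchers that all of a job's objects are queued.
END_OF_JOB: Optional[str] = None


def supervise_one_job(job_queue: queue.Queue,
                      list_objects: Callable[[str], List[str]],
                      fetch_queue: queue.Queue) -> int:
    """Take one job from this pool's queue and hand its object locations on.

    Returns the number of object locations passed to the fetchers.
    """
    job = job_queue.get()                          # blocks until a job is available
    try:
        locations = list_objects(job["flow_id"])   # ask the Store which Objects form the Flow
        for location in locations:
            fetch_queue.put(location)
        fetch_queue.put(END_OF_JOB)
        return len(locations)
    finally:
        job_queue.task_done()                      # mark the job handled even on error
```

Keeping this control loop in Python and passing only locations (not media) across the boundary means the high-rate data never has to flow through the interpreter.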

Testing it Out

So far we have completed our first Playout Instance, with a Supervisor and Playout Engine for playing out H.264 streams using . This allowed us to test our Supervisor, Fetcher and Dispatch code without the need to operate it on systems with hardware support for SMPTE ST 2110.

During our tests, we used a hybrid cloud approach to deploying our Media Object Store, with the API and database hosted in a remote cloud but the S3-compatible object bucket hosted on our local OpenStack cloud. We showed that placing a message on the service’s high-availability job message queue would result in the Playout Engine playing the HD video Flow from the Store, which we could receive on a desktop machine connected to the network using the . Playback was smooth and at full frame rate, an exciting demonstration that our thinking worked.

We’ve talked before about how the use of a hybrid cloud solution helped us get media files into the Store, and it solves a similar set of problems here. It meant that we had the bandwidth we required to transfer the raw frames stored in the object bucket to the server running our Playout Instance. This would have been hard to achieve with a fully remote cloud architecture and demonstrates the benefit of running a local cloud.

What’s Next?

We’re planning to start work soon on a Dispatcher that will allow us to work with SMPTE ST 2110, and on building out the Stream Packaging Service API to coordinate several Playout Instances and allocate jobs to them. We also plan to build a Stream Ingest Service so we can perform the inverse process: ingesting push-based streams into the Store.

As usual, we’ll be providing lots of updates as we go along, so keep checking back to see what the latest is from the Cloud-Fit Production team.

-

BBC R&D - IP Production Facilities

BBC R&D - Industry Workshop on Professional Networked Media

BBC R&D - High Speed Networking: Open Sourcing our Kernel Bypass Work

BBC R&D - Beyond Streams and Files - Storing Frames in the Cloud

BBC R&D - IP Studio: Lightweight Live

BBC R&D - IP Studio: 2017 in Review - 2016 in Review

-


This project is part of the Automated Production and Media Management section