is a project exploring spoken interfaces - things like , or . These are devices whose primary method of interaction is voice, and which pull together data and content for users in a way that makes sense when you’re talking to a disembodied voice.

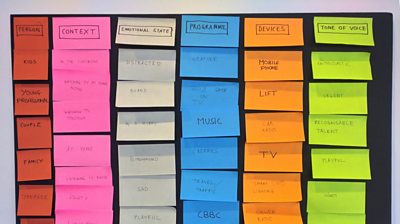

We started at the beginning: what are the elements we need to be able to construct stories for voice UI? We identified a few key elements that, when combined, would allow us to build some example scenarios.

Some of these categories are familiar – people, context, devices. The really interesting ones when thinking about VUI are the emotional state of the person and the tone of voice being used by the device. Speech, unlike screen interaction, is a social form of interaction and comes with a lot of expectations about how it should feel.

Get it wrong, and you’re into a kind of social uncanny valley. In fact, while we were developing this method, more than once we ran into a ‘creepiness problem’ – some of the scenarios, when worked through, just felt, well, creepy. An attentive, friendly personal assistant, present throughout the day, eventually felt invasive and stifling.

A speaking toy for a child, connected to an AI over the internet, swiftly throws up all sorts of possible trickiness. Setting the context and tone for a conversation allows us to think more clearly about how it could unfold.

We filled out the categories with some useful sample elements and set about combining and recombining them across categories to create some trial scenarios. This step is intended to be quick and playful: given the building blocks of a scenario, which ones can you mix and match into something that looks worth developing further?
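We did the mixing and matching by hand, but the idea is easy to sketch in code: pick one element from each category and see what scenario falls out. This is a minimal illustration, not our actual tooling. The category names follow the ones mentioned above (people, context, devices, emotional state, tone of voice), and the sample elements are hypothetical placeholders.

```python
import itertools
import random

# Hypothetical sample elements; the categories follow those named in the post
# (people, context, devices, emotional state, tone of voice).
CATEGORIES = {
    "person": ["commuter", "parent", "child"],
    "context": ["kitchen, morning", "car, evening", "bedroom, bedtime"],
    "device": ["smart speaker", "talking toy", "in-car assistant"],
    "emotional_state": ["rushed", "relaxed", "curious"],
    "tone": ["expert guide", "teaching assistant", "friendly companion"],
}


def random_scenario(categories, rng=random):
    """Pick one element from each category to form a trial scenario."""
    return {name: rng.choice(options) for name, options in categories.items()}


def all_scenarios(categories):
    """Enumerate every combination (the Cartesian product of the categories)."""
    names = list(categories)
    for combo in itertools.product(*(categories[n] for n in names)):
        yield dict(zip(names, combo))


if __name__ == "__main__":
    rng = random.Random(42)  # seeded so a session can be replayed
    for _ in range(3):
        print(random_scenario(CATEGORIES, rng))
```

With three elements in each of five categories there are already 3^5 = 243 combinations. That is why drawing a handful at random and judging them by eye is quicker than working through them all.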

Script planning

The next step was to write example scripts for each scenario. This was another quick step: using different coloured Post-it notes for the human user’s lines and the talking device’s lines, we constructed a few possible conversations on a board. We’re not copywriters by any stretch, but sticking the steps up allowed us to block out conversations swiftly and to move or change anything that didn’t look right.
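A script blocked out on the board is essentially an ordered list of turns, each belonging to one speaker (one Post-it colour). As a minimal sketch, assuming a hypothetical plain-text format of one `SPEAKER: line` turn per row, that structure could be captured like this. The format, the `Turn` class and the helper names are illustrative, not part of our process.

```python
from dataclasses import dataclass


@dataclass
class Turn:
    speaker: str  # e.g. "USER" or "DEVICE", like the two Post-it colours
    text: str


def parse_script(source: str) -> list[Turn]:
    """Parse a hypothetical 'SPEAKER: line' format into ordered turns.

    Blank lines and lines starting with '#' are ignored, so notes can sit
    alongside the script.
    """
    turns = []
    for lineno, raw in enumerate(source.splitlines(), start=1):
        line = raw.strip()
        if not line or line.startswith("#"):
            continue
        # Split on the first colon only, so text like "7:30" survives.
        speaker, sep, text = line.partition(":")
        if not sep or not text.strip():
            raise ValueError(f"line {lineno}: expected 'SPEAKER: text'")
        turns.append(Turn(speaker.strip().upper(), text.strip()))
    return turns


def move_turn(turns: list[Turn], src: int, dst: int) -> list[Turn]:
    """Return a copy with one turn moved, like shifting a note on the board."""
    reordered = list(turns)
    reordered.insert(dst, reordered.pop(src))
    return reordered
```

Returning a reordered copy rather than editing in place keeps earlier versions around, which suits the quick try-and-compare rhythm of working on the board.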

Text-to-speech testing

Once we’d mapped out our scripts, the next step was to test how they sounded; we needed to listen to the conversations to hear whether they flowed naturally. Tom created a tool using Python and the OS X system voices which allowed us to do just this. It proved extremely useful, letting us iterate quickly on versions of the scripts and hear where the holes were: some lines that looked fine in text just didn’t work when spoken.
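Tom’s tool isn’t reproduced here, so the following is only a sketch of the general approach, not his code. It assumes the macOS `say` command, using its `-v` option to pick a system voice and `-r` to set the speaking rate in words per minute, and gives each speaker a different voice. The script lines and voice names are assumptions; `say -v '?'` lists the voices installed on a given machine.

```python
import shlex
import shutil
import subprocess

# Hypothetical script: (speaker, line) pairs in conversation order.
SCRIPT = [
    ("USER", "What's the weather like today?"),
    ("DEVICE", "Light rain this morning, clearing up by lunchtime."),
]

# Assumed voice names; run `say -v '?'` to see what is installed.
VOICES = {"USER": "Daniel", "DEVICE": "Samantha"}


def say_commands(script, voices, rate=None):
    """Build one `say` command per turn, choosing the voice by speaker."""
    commands = []
    for speaker, text in script:
        cmd = ["say", "-v", voices[speaker]]
        if rate is not None:
            cmd += ["-r", str(rate)]  # speaking rate in words per minute
        cmd.append(text)
        commands.append(cmd)
    return commands


def play(script, voices, rate=None, dry_run=False):
    """Speak each turn in order; each `say` call returns once speech ends."""
    commands = say_commands(script, voices, rate)
    if dry_run or shutil.which("say") is None:
        # Dry run, or not on macOS: print the commands instead of speaking.
        for cmd in commands:
            print(shlex.join(cmd))
        return commands
    for cmd in commands:
        subprocess.run(cmd, check=True)
    return commands


if __name__ == "__main__":
    play(SCRIPT, VOICES)
```

Because `subprocess.run` waits for each `say` call to finish, the turns play back one after another with no extra timing code. Giving each speaker a distinct voice makes the turn-taking easy to follow when you’re listening as an observer.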

Using text-to-speech does end up sounding a bit odd (like two robots having a conversation), but it meant we didn’t need a human or two to read out every tweaked iteration of the script, and it let us listen as observers. It also made us think about the intent of the individual scenarios: is this how an expert guide would phrase this bit of speech? How about a teaching assistant?

The text-to-speech renderings of the scripts (auralisations?) were giving us a good sense of how the conversations themselves would sound, but we realised that in order to communicate the whole scenario in context, we’d need something more. Andrew developed a technique for rapidly storyboarding key scenes from the scenarios which allowed everyone in the team (not just those talented with a pencil) to draw frames of a subject. More on that technique in a later post.

Building on the storyboards and audio, we were able to create lo-fi video demos – – which simply and effectively communicate a whole scenario. Video lets us illustrate how a scenario unfolds over time, and it also let us add the extra audio cues that can form part of a VUI, such as sound effects, alerts and earcons.

Final thoughts

We’re really happy with the prototyping methods we’ve developed – they’ve given us the ability to quickly sketch out possible scenarios for voice-driven applications and flesh out those scenarios in useful ways. Some of these scenarios could be implemented on the VUI devices available today; others are a bit further into the future, and that’s great! We want a set of tools that helps us think about the things we could be building, as well as the things we could build now.

We think we’ve got the start of a rich and useful set of methods for thinking about, designing and prototyping VUIs, and we’re keen to develop them further on projects with our colleagues around the BBC. We’d also love to hear from anyone who finds them useful on their own projects!